AI Pedagogical Agent

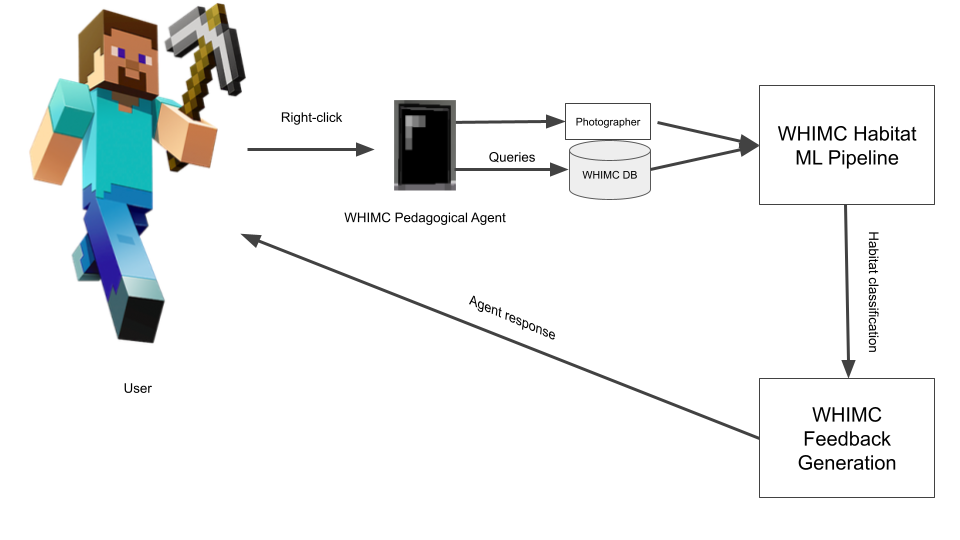

One goal of the WHIMC project is to provide students with pedagogical agents: virtual teaching assistants that can provide individualized support. To promote engagement and foster science interest without changing typical Minecraft gameplay or taking away student agency in exploration, agents are designed as expert observers that follow players and assist when needed. There are currently six dialogue options that students can choose from by right-clicking on their personal helper: edit, dialogue, guidance, progress, reflection, and tag. These tools use a variety of machine learning and non-machine learning techniques to provide individual feedback based on each student’s needs.

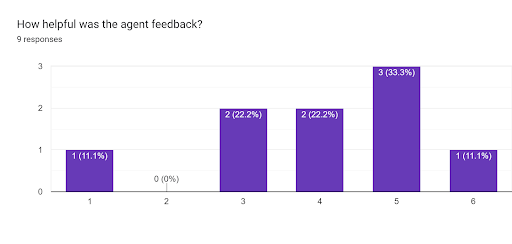

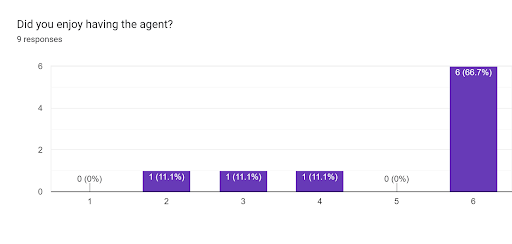

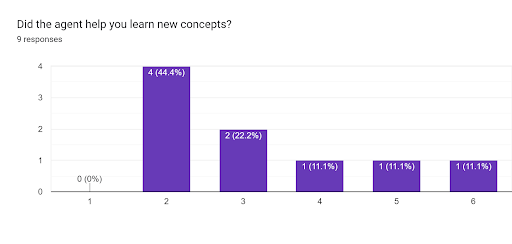

In a survey at our latest camp, students reported that they found the feedback helpful and enjoyed having the agent. However, they did not find the agent useful as an aid to scientific instruction. Graphs from the 6-point Likert-scale surveys are shown below (1 = not very much, 6 = very much so):

Guidance

Students on our server can ask their agents for directions to popular locations on their current map. The agent uses a plugin called Journey, which is being designed and improved by a former researcher on the project. Journey is best described as an immersive alternative to teleportation in a multi-world server. To get from place to place quickly in Minecraft, players tend to use commands that take them there immediately. An administrator might want to make transportation easier by allowing these commands, but might also want to discourage anti-vanilla features such as teleportation. Journey offers a middle ground: a player types a command, and a path to the destination is calculated and displayed. A future direction is to enable agents to act as tour guides that direct students to certain locations rather than just following players.

Plugin repository:

Journey

Tag

The agent’s tagging system uses signal detection theory to determine whether students are noticing important science features in our worlds. Feedback is generated with pattern matching and is meant to teach students about the unique phenomenon they tagged and to prompt further thinking about it.
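
As a rough illustration of the signal detection idea, the sketch below computes the sensitivity index d′ from tag counts. The mapping of tags to hits, misses, false alarms, and correct rejections, the function name `d_prime`, and the log-linear correction are assumptions made for illustration, not details of the WHIMC implementation.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Assumed mapping (hypothetical):
      hit               - student tags an important science feature
      miss              - student passes an important feature without tagging
      false alarm       - student tags an unimportant feature
      correct rejection - student passes an unimportant feature without tagging
    """
    # Log-linear correction keeps both rates strictly between 0 and 1,
    # where the inverse normal CDF is finite.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


if __name__ == "__main__":
    # A student who tags most important features and few unimportant ones.
    print(round(d_prime(9, 1, 1, 9), 3))
```

A d′ near zero suggests the student tags important and unimportant features at similar rates, while a larger d′ suggests the student is discriminating between them.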

Progress

Agents can also provide students with an open learner model that shows their scores on researcher-designed engagement metrics. Our current measures are observation, science tool, exploration, and quest scores. The observation score is the number of observations a student makes during a session. The science tool score is the number of unique science tools a student uses on each world visited during a session. The exploration score treats each map as a 10×10 grid and sums the number of grid positions a student visits on each world during a session. Lastly, the quest score is the total number of missions a student completes.
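
The exploration score can be sketched as follows. This is a minimal example under stated assumptions: the function names, the use of (x, z) coordinates, and the rectangular map bounds are hypothetical, and positions outside the bounds are simply ignored.

```python
def exploration_score(positions, bounds, grid_size=10):
    """Count the distinct grid cells a player visited on one map.

    positions: iterable of (x, z) player coordinates (assumed format)
    bounds:    (min_x, min_z, max_x, max_z) of the map (assumed format)
    """
    min_x, min_z, max_x, max_z = bounds
    cell_w = (max_x - min_x) / grid_size
    cell_h = (max_z - min_z) / grid_size
    visited = set()
    for x, z in positions:
        if not (min_x <= x <= max_x and min_z <= z <= max_z):
            continue  # skip positions outside the map
        # Clamp so points on the far edge fall in the last cell.
        col = min(int((x - min_x) / cell_w), grid_size - 1)
        row = min(int((z - min_z) / cell_h), grid_size - 1)
        visited.add((col, row))
    return len(visited)


def session_exploration(positions_by_world, bounds_by_world):
    """Sum the per-world exploration scores for one session."""
    return sum(
        exploration_score(positions, bounds_by_world[world])
        for world, positions in positions_by_world.items()
    )
```

For example, on a map spanning 0 to 100 on both axes, the positions (5, 5) and (6, 7) fall in the same cell and count once.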

Dialogue

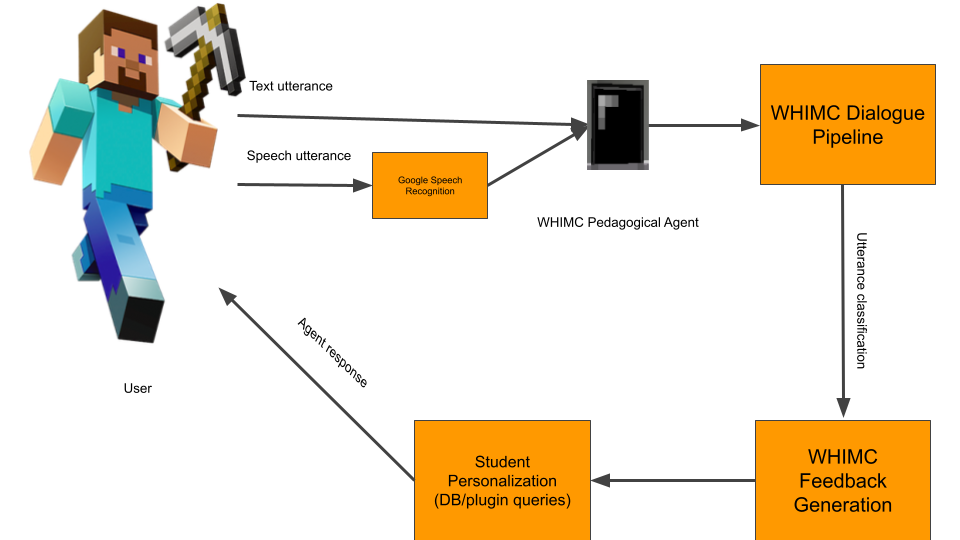

The current agent dialogue system uses a neural network similar to USC’s question-answer virtual humans. Our agent is trained on questions that students commonly ask on our server. Students can enter questions or statements as text or by voice, using Google’s voice recognition software. The agent is also connected to our databases and plugins, so it can give feedback specific to individual players and environments. Future directions include moving from a simple question-answer design to a conversational system and incorporating more abstract concepts in responses.

Reflection

Students can also review and reflect on the conversations they have had with their agents during the session, including every query, every response, and the world they were on at the time.

Edit

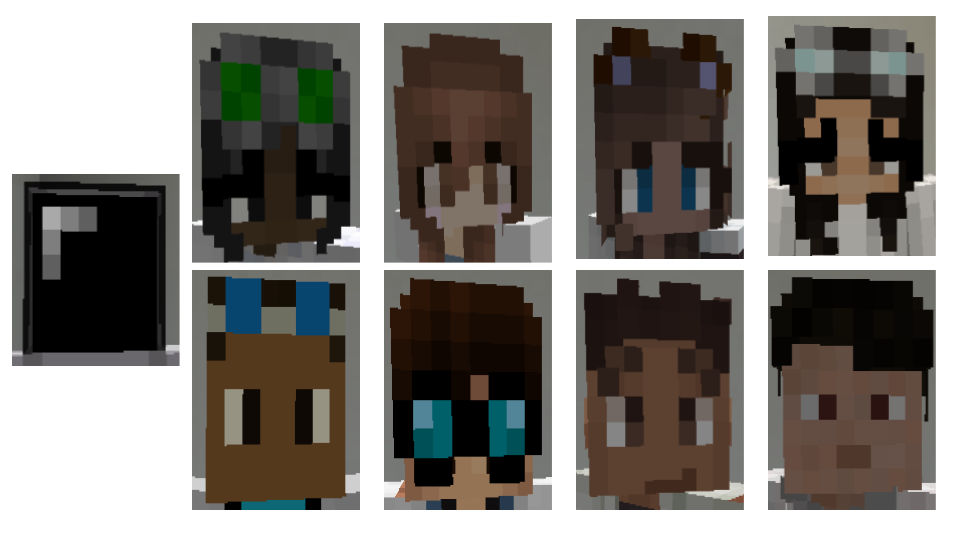

Students on our server can choose and change their agent’s name and its appearance, selecting from 9 different agent images. Future directions include adding more images of scientists (NPCs without lab coats, more diverse images, etc.).

Observation Assessment

In-game observations are an important aspect of student engagement on our server. This section details our work on AI-based support for observations, which is presented to students by the pedagogical agent (PA).

MineObserver

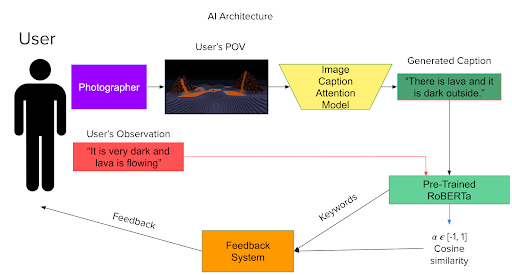

MineObserver uses state-of-the-art methods in computer vision and natural language processing to assess the accuracy of student observations in WHIMC. It consists of three major components: the student, the photographer, and the AI system. First, the student makes an observation to be assessed. Then, the photographer takes a screenshot from the student’s point of view to capture what they are observing. Lastly, the image and observation are sent to the AI system, which evaluates how accurate the observation is given the student’s viewpoint and returns feedback to the student. The AI system is similar to Google’s Show and Tell image captioning model. It pairs a convolutional neural network (CNN) with a long short-term memory (LSTM) network, trained on over 500 images and captions from our WHIMC server, to return apt descriptions of what students are observing. We also use a pretrained language model to measure the similarity between the generated caption and the student’s observation, and we return feedback using keywords from the predicted description.
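
The comparison step can be illustrated with a small sketch. Note that this example swaps in a simple bag-of-words cosine similarity as a stand-in for the pretrained language model, so it only shows the shape of the comparison. The stopword list, the 0.5 threshold, the feedback wording, and all function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list, kept short for illustration.
STOPWORDS = {"a", "an", "the", "i", "see", "is", "of", "and", "on", "in", "it", "there"}


def tokens(text):
    """Lowercase word tokens with stopwords removed."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def cosine_similarity(a, b):
    """Cosine similarity between token-count vectors (stand-in metric)."""
    ca, cb = Counter(tokens(a)), Counter(tokens(b))
    dot = sum(ca[t] * cb[t] for t in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(
        sum(v * v for v in cb.values())
    )
    return dot / norm if norm else 0.0


def feedback(observation, generated_caption, threshold=0.5):
    """Return praise, or a prompt built from caption keywords the student missed."""
    if cosine_similarity(observation, generated_caption) >= threshold:
        return "Nice observation!"
    seen = set(tokens(observation))
    missing = []
    for t in tokens(generated_caption):
        if t not in seen and t not in missing:
            missing.append(t)
    return "Look again. Can you describe: " + ", ".join(missing[:3]) + "?"
```

A pretrained sentence-embedding model would capture paraphrases that this word-overlap stand-in misses, which is presumably why the deployed system uses one.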

Help us Build the Dataset for the Next Iteration of MineObserver:

Link to provide image captions for future training data

Publication from this Work:

MineObserver: A Deep Learning Framework for Assessing Natural Language Descriptions of Minecraft Imagery

System Architecture:

Observation Classification for Bayesian Knowledge Tracing

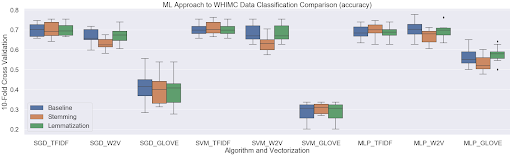

We have also created a tool to assess observation structure using Bayesian Knowledge Tracing (BKT). BKT is a machine learning approach that estimates knowledge growth in a specific domain based on whether students correctly solve problems when given the opportunity. Since determining the correctness of student observations can be ambiguous, we decided to focus on observation structure. To do this, we built on previous work from the project, which found that students were making six types of observations on our server: analogy, comparative, descriptive, factual, inference, and off-topic. We then trained a variety of machine learning models with different natural language processing techniques to find the best pipeline for classifying the types of observations our students make. Using data from previous camps and 10-fold cross-validation, we found that models using non-pretrained language models performed moderately well on accuracy, F1, precision, and recall.

Model and technique accuracy comparison:

To assess correctness, we compare the type a student assigns to their in-game observation with the observation type predicted by the machine learning model. The result updates the individual student’s learner model using BKT, and students are given correctness feedback and progress bars showing their mastery of the observation types.
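
The standard BKT update can be sketched as below. The parameter values, the names `BKTParams`, `bkt_update`, and `record_observation`, and the choice to update the skill for the predicted observation type are all assumptions for illustration; the deployed parameters and bookkeeping may differ.

```python
from dataclasses import dataclass

OBSERVATION_TYPES = (
    "analogy", "comparative", "descriptive", "factual", "inference", "off-topic",
)


@dataclass(frozen=True)
class BKTParams:
    # Illustrative values only, not the fitted WHIMC parameters.
    p_init: float = 0.2   # P(L0): prior probability the skill is known
    p_learn: float = 0.15  # P(T): probability of learning at each opportunity
    p_guess: float = 0.2  # P(G): correct answer without knowing the skill
    p_slip: float = 0.1   # P(S): incorrect answer despite knowing the skill


def bkt_update(p_known, correct, params):
    """Return the updated mastery estimate after one observed attempt."""
    if correct:
        evidence = p_known * (1 - params.p_slip)
        posterior = evidence / (evidence + (1 - p_known) * params.p_guess)
    else:
        evidence = p_known * params.p_slip
        posterior = evidence / (evidence + (1 - p_known) * (1 - params.p_guess))
    # Account for the chance of learning during this opportunity.
    return posterior + (1 - posterior) * params.p_learn


def new_learner_model(params):
    """One mastery estimate per observation type."""
    return {t: params.p_init for t in OBSERVATION_TYPES}


def record_observation(model, student_label, predicted_label, params):
    """Treat agreement with the classifier as 'correct' (an assumption)."""
    correct = student_label == predicted_label
    model[predicted_label] = bkt_update(model[predicted_label], correct, params)
    return correct
```

With these illustrative parameters, one correct classification raises a mastery estimate from 0.2 to 0.6, which is the kind of value a progress bar could display.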

Publication from this work:

Classification of Natural Language Descriptions for Bayesian Knowledge Tracing in Minecraft

Habitat Analysis

During the final sessions of our WHIMC curriculum, students work in teams to create feasible Mars habitats. This section details our work to provide AI support for habitat building.

Habitat Scoring Scheme

In collaboration with expert astronomers on the team, we created an extensive scoring scheme so that every part of each group’s habitat is assessed in a fair and balanced manner. The scheme covers the aspects of Mars habitat building outlined during the camp, shown in the tables below. In total, 11 categories were defined, each scored on a three-tier system. From lowest to highest score, the tiers are “Basic”, “Intermediate”, and “Mastered”, each representing a level of application and mastery of the Mars habitat activity.

To ensure that the most accurate and scientifically sound habitats score the highest, a weight of 1.5 is applied to the categories that are essential for survival on Mars: atmosphere regulation, protection from radiation, food and water, supply storage, power generation, communications facilities, and rounded structure shape. The remaining categories (the area where the base is built, combating different levels of gravity, health and wellness, and transportation) were deemed important but less essential to the immediate survival of scientists inhabiting a Mars habitat.
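
The weighting can be sketched as a small calculation. The point values per tier (1, 2, 3) and the category identifiers are assumptions for illustration; only the 1.5 weight and the split between essential and other categories come from the description above.

```python
# Assumed point values per tier; the source gives the tier names only.
TIER_POINTS = {"Basic": 1, "Intermediate": 2, "Mastered": 3}

# Categories weighted by 1.5 because they are essential for survival.
ESSENTIAL = {
    "atmosphere_regulation", "radiation_protection", "food_and_water",
    "supply_storage", "power_generation", "communications", "rounded_shape",
}
# Categories scored without a weight.
OTHER = {"build_area", "gravity", "health_and_wellness", "transportation"}

ESSENTIAL_WEIGHT = 1.5


def habitat_score(tiers):
    """Weighted total for one habitat.

    tiers: dict mapping each of the 11 category names to a tier name.
    """
    unknown = set(tiers) - ESSENTIAL - OTHER
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    total = 0.0
    for category, tier in tiers.items():
        weight = ESSENTIAL_WEIGHT if category in ESSENTIAL else 1.0
        total += weight * TIER_POINTS[tier]
    return total
```

Under these assumed point values, a habitat rated "Mastered" in every category would score 7 × 1.5 × 3 + 4 × 3 = 43.5.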

Mars Habitat Classifiers

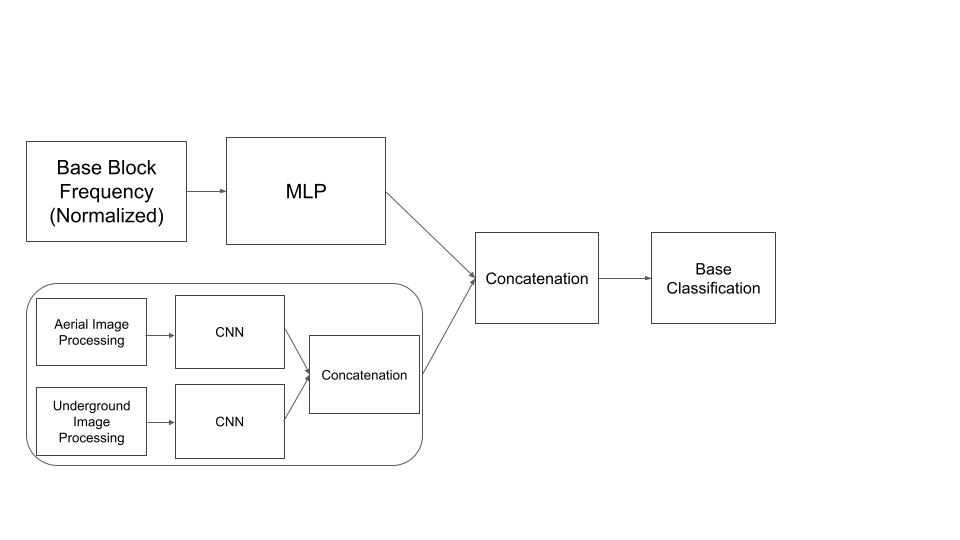

Our Minecraft plugins can capture images of the habitats and the blocks that were used to construct each build. It is impossible, however, for a model to predict all of the categories from a single input type (food sources cannot be interpreted from aerial images, location cannot be interpreted from underground images, shape cannot be interpreted from block data, etc.). Thus, we took an exploratory approach and trained models on seven different input combinations for the 11 habitat scoring categories described above, using late-fusion concatenation to combine input types. The seven combinations are the possible subsets of the three types of input available for each base: aerial images, underground images, and block data. The classifiers are designed to be used by our PAs to deliver positive feedback on a group’s highest-scoring category and constructive feedback on its lowest-scoring category, so the group knows where to spend more time.
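
A minimal sketch of how three input types yield seven combinations, and of what late-fusion concatenation does to per-input feature vectors, is given below. The feature vectors are placeholders; in practice each would come from a separate encoder, and the names used here are hypothetical.

```python
from itertools import combinations

INPUT_TYPES = ("aerial_image", "underground_image", "block_data")


def input_combinations(types=INPUT_TYPES):
    """All non-empty subsets of the input types (7 for three inputs)."""
    return [
        combo
        for size in range(1, len(types) + 1)
        for combo in combinations(types, size)
    ]


def late_fusion(features, combo):
    """Concatenate the per-input feature vectors selected by `combo`.

    features: dict mapping an input type to a feature vector (list of
              floats) produced by that input's own encoder.
    """
    fused = []
    for name in combo:
        fused.extend(features[name])
    return fused


if __name__ == "__main__":
    # Placeholder feature vectors standing in for encoder outputs.
    features = {
        "aerial_image": [0.1, 0.2],
        "underground_image": [0.3],
        "block_data": [4.0, 5.0],
    }
    for combo in input_combinations():
        print(combo, late_fusion(features, combo))
```

The fused vector would then feed a classification head for each scoring category, so each input is encoded independently and combined only at the end.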

We found that models using block data significantly outperformed models that used only one image type or a combination of images on accuracy, precision, recall, and F1 score. The best-performing models used all three types of data, architecture shown below. These models, however, did not significantly outperform block-only models. Due to deployment considerations, block-only models have been integrated with the PA on our server to give real-time feedback to students.

Cluster Analysis

We also created a tool for content designers by adapting a clustering strategy with word clouds used by our partners at the Ateneo University in the Philippines. In this work, we use the within-cluster sum of squares (WCSS) to determine the optimal number of clusters for k-means clustering of the observations and science tools on each Minecraft map. Then, we generate a word cloud for each observation and science tool cluster and superimpose the result on the map. In addition, we include red dots to mark positions that players have visited. The goal of this project is to determine which aspects and areas of the maps are being fully explored and what can be improved in future iterations of the project.
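
The cluster-count selection can be sketched in plain Python. This is an illustration under assumptions, not the project’s pipeline: the farthest-point initialization, the "largest bend" elbow heuristic, and all function names are choices made here so that the example is deterministic and self-contained.

```python
def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean(points):
    n = len(points)
    return tuple(sum(coord) / n for coord in zip(*points))


def init_centroids(points, k):
    """Deterministic farthest-point initialization (an assumed choice)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, iterations=100):
    """Lloyd's algorithm; returns the final centroids."""
    centroids = init_centroids(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        updated = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def wcss(points, centroids):
    """Within-cluster sum of squares for a nearest-centroid assignment."""
    return sum(min(sq_dist(p, c) for c in centroids) for p in points)


def elbow_k(points, max_k):
    """Pick the k (2 <= k < max_k) where the WCSS curve bends the most.

    Requires max_k >= 3 so that at least one bend can be measured.
    """
    scores = [wcss(points, kmeans(points, k)) for k in range(1, max_k + 1)]
    best_k, best_bend = 2, float("-inf")
    for k in range(2, max_k):
        # scores[k - 1] is the WCSS for k clusters.
        bend = (scores[k - 2] - scores[k - 1]) - (scores[k - 1] - scores[k])
        if bend > best_bend:
            best_k, best_bend = k, bend
    return best_k
```

Given the (x, z) positions of observations on a map, `elbow_k` would suggest a cluster count, and each resulting cluster’s text could then be turned into a word cloud.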